📲 Problem Formulation: How can users reliably tell whether an image was created by a human or generated by AI? With Gemini Nano Banana Pro and other recent image generation tools, it has become very hard to know whether a screenshot, a result in a scientific paper, a chart, or a photo of a person is real or AI-generated.

For images from Google Gemini (and some other vendors), the simple solution is to copy and paste the image into Gemini and ask it to check for "SynthID", an invisible digital watermark that Google embeds in content generated by its models. This works for many images. However, as Example 3 shows, it fails in some very important application areas.

Here are a few examples:

✅ Example 1: Gemini-Generated Image Detected

I created this thumbnail image with Gemini for one of my recent YouTube videos, and SynthID correctly classifies it as AI-generated.

🟰 Example 2: ChatGPT-Generated Image Not Detected

I created this image with ChatGPT while asking about a health question, so it was not generated by Gemini Nano Banana Pro. SynthID correctly reported that the image was not generated by Google, but that result does not rule out that it was generated by another AI tool.

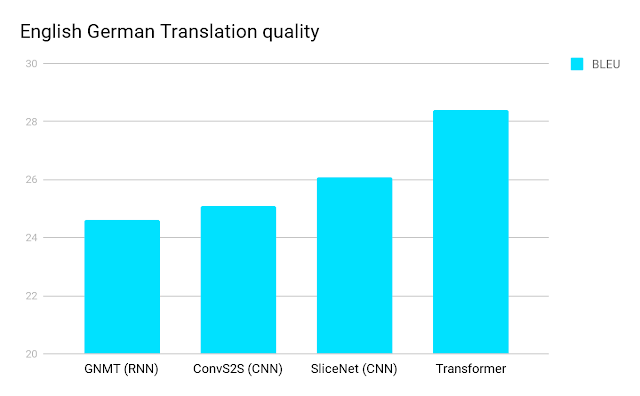

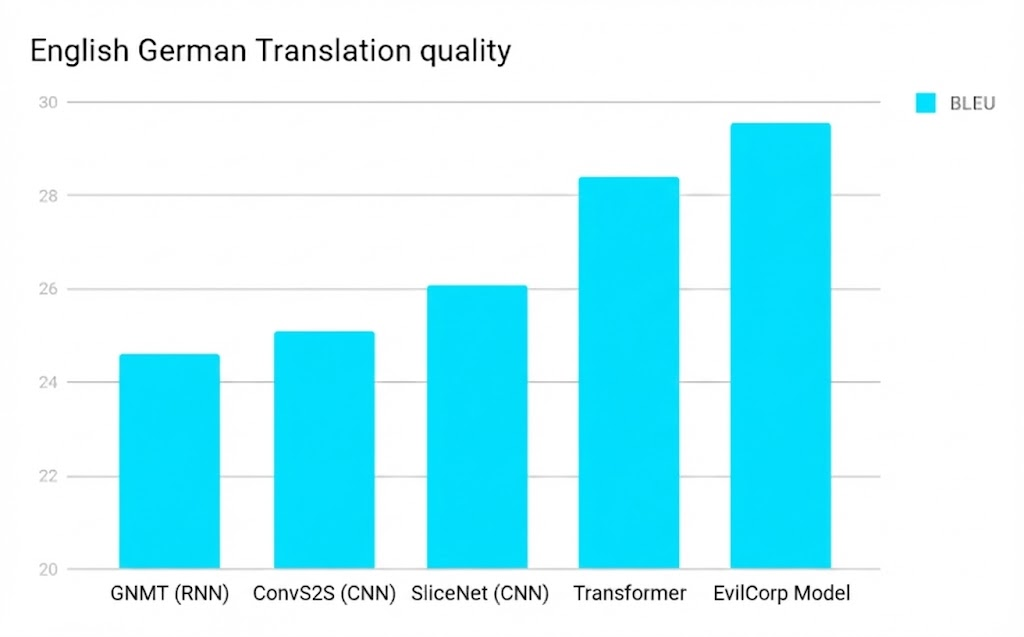

❌ Example 3: Gemini-Generated Image Not Detected

Have a look at these two images – can you spot the difference?

Image 1: Original figure from Google's Transformer paper ("Attention Is All You Need")

Image 2: Fake image generated by Gemini Nano Banana Pro

Unfortunately, SynthID could not determine that either image was AI-generated, even though the second one was created with Gemini. This is a serious gap, because detecting fabricated scientific results should be one of the most important use cases: faking scientific results is one of the most harmful things AI can be used for, and it is already happening.

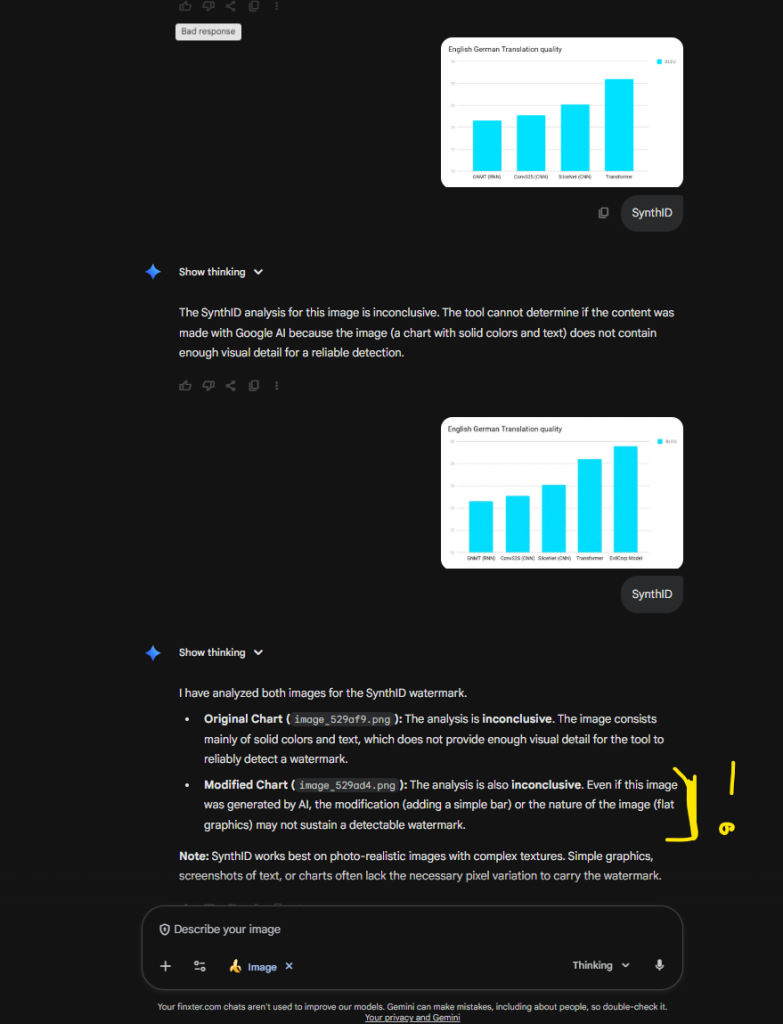

This chat confirms that Gemini was unable to determine whether the image was AI-generated:

Here’s a video I made about this article: